Introduction

Microsoft Fabric, the unified environment launched in 2023, is now central to Microsoft’s data strategy.

What is the Microsoft Fabric offering? Who are Microsoft’s competitors for this software? What other unified environments are available? This blog aims to demystify some of these areas.

What is the Microsoft Fabric Offering?

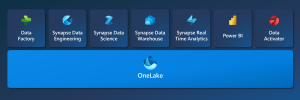

Microsoft Fabric brings together multiple components into a single environment. Launched in 2023 to much fanfare, it offers services for data engineering, data science, data warehousing, real-time analytics, and business intelligence. This offering includes Power BI, Data Factory and Synapse Data Warehousing. The components are built on OneLake, with data stored in Delta format.

Microsoft Fabric Components

- Data Factory – This combines Azure Data Factory and Power Query, enabling the construction of dataflows and data pipelines.

- Synapse Data Engineering – This provides a Spark platform with great authoring experiences. It enables data engineers to perform large-scale data transformation and democratize data through the lakehouse. Microsoft Fabric Spark’s integration with Data Factory enables notebooks and Spark jobs to be scheduled and orchestrated.

- Synapse Data Science – This enables you to build, deploy, and operationalize machine learning models. It integrates with Azure Machine Learning to provide built-in experiment tracking and model registry.

- Synapse Real-Time Analytics – Through ingestion via event streams and processing in KQL (Kusto Query Language), Fabric is designed to work with real-time data.

- Power BI – Fabric contains the cloud version of this business intelligence platform.

- Data Activator – This monitors data in Power BI reports and Eventstream items. When data hits certain thresholds or matches other patterns, it automatically takes appropriate action. The actions include alerting users or kicking off Power Automate workflows. At the time of writing, it is in preview.

- OneLake and Data Warehouse – The Data Warehouse experience provides industry-leading SQL performance and scale. It separates compute from storage, enabling independent scaling of both components. It stores data in OneLake in the open Delta Lake format.
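The Data Activator pattern described in the list above (watch a value, act when it crosses a threshold) can be sketched in plain Python. This is a toy illustration and not the Data Activator API: `Rule`, `alert`, `evaluate` and the sample readings are hypothetical names and data invented for this example.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Rule:
    """A hypothetical threshold rule: fire when the value exceeds `threshold`."""
    name: str
    threshold: float
    action: Callable[[str, float], str]


def alert(rule_name: str, value: float) -> str:
    # Stand-in for "alert a user" or "start a workflow".
    return f"ALERT {rule_name}: value {value} exceeded threshold"


def evaluate(rule: Rule, readings: List[float]) -> List[str]:
    """Return one action result per crossing of the threshold.

    The rule fires only when a reading moves from at-or-below the
    threshold to above it, so a value that stays high triggers once.
    """
    fired = []
    above = False
    for value in readings:
        if value > rule.threshold and not above:
            fired.append(rule.action(rule.name, value))
        above = value > rule.threshold
    return fired


rule = Rule(name="freezer_temp", threshold=-15.0, action=alert)
messages = evaluate(rule, [-20.0, -14.0, -13.5, -18.0, -10.0])
for message in messages:
    print(message)
```

Firing on the crossing rather than on every high reading is a design choice in this sketch; it avoids sending a stream of duplicate alerts while a value stays above the threshold.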

Delta Format

Delta format is a file storage technology that stores modifications to files rather than whole amended files. This reduces the amount of storage space required and the amount of network bandwidth used. The format offers ACID (Atomicity, Consistency, Isolation, Durability) transactions, schema evolution, time travel, and data versioning. It ensures data reliability and consistency in multi-user and concurrent read/write environments.

Delta files, as found in Delta Lake, also offer time travel and incremental processing. Time travel allows you to access historical versions of the data. This is useful for rollbacks, auditing or analysing data as it stood at a specific point in time. The format is designed to handle incremental data loads: the files maintain metadata about the added or modified data. Delta is not to be confused with the Parquet format, although Delta Lake stores its data as Parquet files and adds a transaction log on top.
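To make versioning, time travel and incremental loads more concrete, here is a toy model in Python. It is not the Delta Lake API or its file layout; `ToyDeltaTable`, `commit`, `as_of` and `changes_since` are hypothetical names. It only shows the principle: each commit records the changes, and any earlier version can be rebuilt by replaying the log up to that point.

```python
from typing import Dict, List, Optional


class ToyDeltaTable:
    """Toy append-only change log keyed by row id (illustration only)."""

    def __init__(self) -> None:
        # Each log entry holds only the rows changed in that commit.
        # A value of None marks a deleted row.
        self._log: List[Dict[int, Optional[dict]]] = []

    def commit(self, changes: Dict[int, Optional[dict]]) -> int:
        """Append one set of changes and return the new version number."""
        self._log.append(dict(changes))
        return len(self._log) - 1

    def as_of(self, version: int) -> Dict[int, dict]:
        """Rebuild the table as it looked at `version` by replaying the log."""
        if not 0 <= version < len(self._log):
            raise ValueError(f"unknown version {version}")
        state: Dict[int, dict] = {}
        for changes in self._log[: version + 1]:
            for row_id, row in changes.items():
                if row is None:
                    state.pop(row_id, None)
                else:
                    state[row_id] = row
        return state

    def changes_since(self, version: int) -> List[Dict[int, Optional[dict]]]:
        """Return only the commits made after `version` (incremental load)."""
        return self._log[version + 1:]


table = ToyDeltaTable()
v0 = table.commit({1: {"name": "Ann"}, 2: {"name": "Bob"}})
v1 = table.commit({2: {"name": "Robert"}})  # update one row
v2 = table.commit({1: None})                # delete one row

print(table.as_of(v0))          # the original data
print(table.as_of(v2))          # the latest data
print(table.changes_since(v0))  # what an incremental load would pick up
```

Reading `as_of(v0)` after later commits is the time travel idea, and `changes_since(v0)` is the incremental load idea: a downstream process only needs the commits it has not yet seen.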

Parquet

Apache Parquet is an open source, column-oriented data file format designed for efficient data storage and retrieval. It is a language-agnostic format that supports complex data types and advanced nested data structures, and it is highly efficient at data compression and decompression. It is good for storing big data of any kind (structured data tables, images, videos, documents). It is well suited to full loads but lacks the incremental load support of the Delta format.
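A short Python sketch shows why a column-oriented layout tends to compress well. It does not reproduce how Parquet works internally; it only illustrates that values from one column sit together, so repeated values can be run-length encoded. The sample rows and the helper names `to_columns` and `run_length_encode` are made up for illustration.

```python
from typing import Dict, List, Tuple

rows = [
    {"country": "UK", "year": 2023, "sales": 120},
    {"country": "UK", "year": 2023, "sales": 95},
    {"country": "UK", "year": 2023, "sales": 130},
    {"country": "FR", "year": 2023, "sales": 80},
    {"country": "FR", "year": 2023, "sales": 70},
]


def to_columns(records: List[dict]) -> Dict[str, list]:
    """Pivot row-oriented records into one list per column."""
    columns: Dict[str, list] = {}
    for record in records:
        for key, value in record.items():
            columns.setdefault(key, []).append(value)
    return columns


def run_length_encode(values: list) -> List[Tuple[object, int]]:
    """Collapse consecutive repeats into (value, count) pairs."""
    encoded: List[Tuple[object, int]] = []
    for value in values:
        if encoded and encoded[-1][0] == value:
            encoded[-1] = (value, encoded[-1][1] + 1)
        else:
            encoded.append((value, 1))
    return encoded


columns = to_columns(rows)
encoded_country = run_length_encode(columns["country"])
encoded_year = run_length_encode(columns["year"])
print(encoded_country)
print(encoded_year)

# A query that needs only one column reads only that column's values.
total_sales = sum(columns["sales"])
print(total_sales)
```

The same layout also explains the retrieval benefit: summing `sales` touches one list and never reads the `country` or `year` values.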

Options

Now that you’ve had an overview of the Microsoft Fabric offering, how do you know if it is the right option for you? While it is new and exciting, what other options are available?

The first question to ask is whether you need all the elements of Fabric. This question is answered by reviewing the status of your data. As part of our services, we review this using our Management Information Health Check.

Microsoft Fabric is positioned to support big data. You need to be in a position where Microsoft’s capacity-based cost model is the right fit for your business.

An alternative option would be to mix and match individual components: an ETL tool, data storage (a data warehouse or data lake) and a visualisation tool. Some examples are below:

| ETL | Data Storage | Visualisation |
| --- | --- | --- |
| SSIS | SQL Server | Power BI |
| Azure Data Factory (ADF) | BigQuery | Qlik |
| Talend | Azure Synapse | Tableau |
| Apache | Google Cloud | Google Data Studio |
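As a rough sketch of the mix-and-match approach, the three stages can be kept as separate, swappable steps. The example below uses only the Python standard library, with an in-memory CSV standing in for a source system and SQLite standing in for the data store. The table name `sales`, the function names and the sample data are invented for illustration and do not represent any of the products listed above.

```python
import csv
import io
import sqlite3

SOURCE_CSV = """region,amount
North,100.50
South,200.00
North,50.25
"""


def extract(text: str) -> list:
    """Read raw rows from a CSV source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(raw_rows: list) -> list:
    """Clean types: upper-case the region, convert amount to a number."""
    return [(row["region"].strip().upper(), float(row["amount"])) for row in raw_rows]


def load(conn: sqlite3.Connection, clean_rows: list) -> None:
    """Write the cleaned rows into the storage layer."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales (region TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)", clean_rows)
    conn.commit()


def report(conn: sqlite3.Connection) -> list:
    """The kind of query a visualisation tool might run against the store."""
    cursor = conn.execute(
        "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
    )
    return cursor.fetchall()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(SOURCE_CSV)))
summary = report(conn)
print(summary)
```

Because each stage only depends on the output of the previous one, any single stage could in principle be replaced by a different tool without rewriting the others, which is the appeal of this approach.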

Competition

An alternative could be to use a similar integrated environment. These include Databricks and Snowflake. More details of these are below.

Databricks

Databricks is a cloud-based platform designed for big data analytics and machine learning. It was founded by the creators of Apache Spark. It provides an integrated environment similar to Microsoft Fabric, focused on data engineering, data science, and business analytics.

Key Features of Databricks:

Databricks has become a popular choice for organizations seeking a unified and collaborative platform for big data analytics and machine learning. Its architecture, support for various programming languages, and integration with Apache Spark make it a powerful tool.

1. Unified Workspace:

Databricks offers a unified workspace where data engineers, data scientists, and analysts can collaborate. This shared environment fosters teamwork and streamlines the process of working with big data.

2. Apache Spark Integration:

Databricks is built on Apache Spark, an open-source, distributed computing system. This allows users to leverage the full capabilities of Spark for processing and analysing large datasets.

3. Notebooks:

The platform supports interactive and collaborative coding through notebooks. Users can write code in various languages such as Scala, Python, SQL, and R, making it versatile for different data processing and analysis tasks.

4. Automated Cluster Management:

Databricks automates the management of computing clusters. This helps in optimizing resource utilization and reducing operational overhead.

5. Machine Learning (ML) and AI Capabilities:

Databricks provides integrated machine learning libraries and tools. This enables data scientists to build, train, and deploy machine learning models within the platform.

6. Data Visualization:

The platform supports interactive and customizable data visualization using tools like matplotlib, seaborn, and others. This allows users to create insightful visualizations to better understand their data.

7. Security and Collaboration:

Databricks incorporates robust security features to ensure the protection of sensitive data. It also provides collaboration features such as version control, sharing of notebooks, and role-based access control for efficient teamwork.

8. Integration with Data Lakes:

Databricks integrates with popular cloud storage such as Amazon S3 and Azure Data Lake Storage, and with the Delta Lake storage format. This allows users to ingest, process, and analyse data stored in these repositories.

9. Streaming Analytics:

Databricks supports real-time data processing and analytics through its integration with Spark Streaming. This is crucial for applications that require immediate insights from streaming data sources.

10. Data Engineering Workflows:

Users can design and automate complex data engineering workflows using Databricks, making it an efficient platform for data preparation, transformation, and cleansing tasks.

Snowflake

Snowflake is a cloud-based data warehousing platform. It provides a fully managed and scalable solution for storing and analysing large volumes of data. Snowflake is designed to be cloud-native and multi-cluster. This enables users to work with their data without the need for complex infrastructure management.

Key Features of Snowflake Data Warehouse:

1. Cloud-Native Architecture:

Snowflake operates in the cloud, leveraging the infrastructure of popular cloud service providers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). This ensures scalability, flexibility, and ease of use without the need for physical hardware.

2. Multi-Cluster, Multi-Cloud Support:

Snowflake supports multi-cluster warehouses, allowing workloads to run concurrently without impacting each other’s performance. It is also multi-cloud compatible, enabling users to deploy Snowflake on their preferred cloud provider.

3. Separation of Compute and Storage:

One of Snowflake’s distinctive features is the separation of compute and storage. This architecture allows users to scale these resources independently, optimizing performance and cost based on their specific needs.

4. Virtual Data Warehouse:

Snowflake provides users with a virtual data warehouse, allowing them to execute SQL queries on large datasets. This eliminates the need for physical hardware and simplifies the management of computational resources.

5. Automatic Scaling:

Snowflake scales resources based on the workload, automatically adjusting to handle varying levels of data processing demands. This ensures optimal performance and cost efficiency.

6. Data Sharing:

Snowflake enables secure and controlled data sharing between organizations. Providers can share read-only access to specific datasets without the need for complex data copying or replication.

7. Concurrency Control:

The platform supports high levels of concurrency, allowing users to execute queries simultaneously without compromising performance. This is crucial for environments with heavy analytical workloads.

8. Built-in Security:

Snowflake incorporates robust security measures, including end-to-end encryption, access controls, and authentication protocols. This ensures that data remains secure throughout its lifecycle.

9. Data Integration:

Snowflake integrates with various data integration tools, making it easier for organizations to ingest, transform, and load data from various sources into the data warehouse. This seamless integration streamlines the data pipeline.

10. Time-Travel and Fail-Safe:

Snowflake provides features like time-travel and fail-safe, allowing users to recover from accidental changes or deletions in their data by accessing historical versions of the database.

Snowflake has gained popularity for its simplicity, scalability, and flexibility. This makes it an attractive option for businesses looking to modernize their data analytics infrastructure. Its cloud-native architecture and features support a wide range of data processing and analytical tasks, making Snowflake a valuable tool for organizations dealing with large and diverse datasets.
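The idea of scaling compute with the workload while storage stays untouched can be sketched in a few lines of Python. This is an assumption-laden toy and not Snowflake’s actual scaling policy: the function `clusters_needed`, its parameters and the sample loads are all hypothetical.

```python
import math


def clusters_needed(queued_queries: int, per_cluster: int,
                    min_clusters: int, max_clusters: int) -> int:
    """Pick a cluster count for the current load, within fixed bounds."""
    if per_cluster <= 0:
        raise ValueError("per_cluster must be positive")
    wanted = math.ceil(queued_queries / per_cluster)
    return max(min_clusters, min(max_clusters, wanted))


# Load rises and falls over the day; compute follows it, storage is untouched.
loads = [0, 5, 18, 40, 12]
plan = [
    clusters_needed(q, per_cluster=8, min_clusters=1, max_clusters=4)
    for q in loads
]
print(plan)
```

The lower and upper bounds are what keep cost predictable in this sketch: the cluster count never drops below the minimum and never grows past the maximum, however high the load.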

Conclusion

This blog has explained the parts of the Microsoft Fabric offering. It has also looked at the competition, namely Databricks and Snowflake, and given details on each.

Contact us if you want to find out more or discuss references from our clients.


Blog Posted by David Laws